On April 29, 2024, the U.S. Department of Commerce (DoC) announced that the National Institute of Standards and Technology (NIST) had released four draft publications on artificial intelligence (AI), marking 180 days since the Executive Order on the Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence. NIST noted that two of the drafts are intended as companion resources to its AI Risk Management Framework (AI RMF) and its Secure Software Development Framework (SSDF).

The four documents are:

- NIST AI 600-1 - Artificial Intelligence Risk Management Framework: Generative Artificial Intelligence Profile (the Generative AI Profile);

- NIST Special Publication (SP) 800-218A - Secure Software Development Practices for Generative AI and Dual-Use Foundation Models (NIST SP 800-218A);

- NIST AI 100-4 - Reducing Risks Posed by Synthetic Content (the Synthetic Content Profile); and

- NIST AI 100-5 - A Plan for Global Engagement on AI Standards (the AI Standards Profile).

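Generative AI

The Generative AI Profile is designed to help organizations identify and manage the risks of generative AI. It is organized around a list of 13 risks that are novel to or exacerbated by generative AI, such as easier access to information on chemical, biological, radiological, or nuclear (CBRN) weapons.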

SSDF and Generative AI

NIST SP 800-218A supplements the SSDF with practices and recommendations specific to generative AI and dual-use foundation models, applied throughout the software development lifecycle.

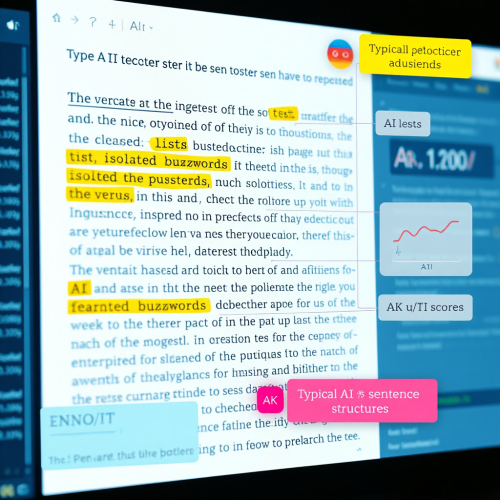

Synthetic Content

The Synthetic Content Profile outlines technical approaches for authenticating content and tracking its provenance, labeling synthetic content (for example, through watermarking), and detecting it.

Global AI Standards

The AI Standards Profile sets out a plan for U.S. engagement in the development of global AI standards and requests feedback on which areas and topics most need standardization.

Alongside the four drafts, which aim to improve the safety, security, and trustworthiness of AI systems, the Department of Commerce announced several other initiatives related to the Executive Order. Among them, NIST launched a series of challenges to support the development of methods for distinguishing human-produced content from AI-produced content.

All four publications are initial public drafts, and NIST is accepting public comments on each until June 2, 2024.

Glossary

- CBRN: Abbreviation for Chemical, Biological, Radiological, Nuclear, referring to weapons or substances within these categories.

- AI: Abbreviation for Artificial Intelligence, referring to the ability of a system or machine to emulate human intelligence.

- SSDF: Abbreviation for Secure Software Development Framework, NIST's set of recommended practices for developing secure software.

- Generative AI: Refers to artificial intelligence systems that generate new content, such as text, images, audio, or video, typically in response to a user prompt.

- Standardization: Process of creating norms or standards to ensure consistency and compatibility among systems or products.

- Risks: Possibility of loss, damage, or negative consequences associated with an activity or system.

- Synthetic Content: Content produced or altered by artificial intelligence rather than humans.

- Technology: Application of scientific and technical knowledge for practical purposes, including tools and processes.

- Publications: Printed or digital documents made available to the public.

- Department of Commerce: U.S. federal executive department responsible for economic, trade, and technology policy; NIST is one of its agencies.

- Global Standards: Standards or guidelines accepted internationally.